🌟 Editor's Note

Congrats on joining the community! If you like what you see, please share it with your friends so we can keep iterating and bring you the best newsletter possible. Feel free to reach out with feedback and suggestions at [email protected]!

🚀 This Week's Top Stories in AI

🤖 xAI Raises $20B to Expand Grok AI and Enterprise Tools

xAI has closed an upsized $20 billion Series E funding round, well above its original $15 billion target, with major participation from investors including Valor Equity Partners, StepStone Group, Fidelity Management & Research Company, the Qatar Investment Authority, MGX, and Baron Capital Group, along with strategic backing from NVIDIA and Cisco Investments.

The capital will be used to expand xAI’s Colossus GPU compute infrastructure—now running over one million H100 GPU equivalents—and accelerate development of core AI offerings including Grok models, Grok Voice, Grok Imagine, and next-generation Grok 5, as well as new enterprise products and tools.

Alongside model R&D, xAI is rolling out enterprise-focused solutions such as Grok Business, Grok Enterprise with admin controls and SSO, and developer APIs (e.g., Collections), positioning itself to compete with leaders like OpenAI and scale across consumer, business, and Tesla/X integrations.

🤖 OpenAI Begins Limited Rollout of GPT-5.2 “Codex-Max”

OpenAI has started testing a new model variant called GPT-5.2-Codex-Max with select paid users of its Codex coding service. The model was spotted when users asked Codex which model it was running.

This variant builds on GPT-5.2-Codex, OpenAI's agentic coding model designed for long-horizon tasks, better context handling, and tool use, with potentially improved reliability and visual understanding in coding workflows.

OpenAI has not formally announced details and access appears limited, but "Max" variants have historically signaled a performance bump over the standard models, suggesting enhanced capabilities for demanding software engineering use cases.

🤖 Liquid AI Unveils LFM2.5 — Next-Gen On-Device AI Models

Liquid AI has released LFM2.5, a new family of compact on-device AI models built on its device-optimized LFM2 architecture, designed to deliver fast, high-quality, always-on intelligence on local hardware like phones, laptops, vehicles, and IoT devices.

The LFM2.5 lineup includes multiple open-weight variants — Base and Instruct text models, a Japanese-optimized model, a vision-language model, and an audio-language model — all tuned for strong instruction following, multimodal capabilities, and real-time performance on constrained hardware.

The models extend pre-training to ~28 trillion tokens, pair it with advanced reinforcement learning, and deliver benchmark performance and efficiency that outpace many other ~1B-parameter models, enabling truly local AI agents with no cloud dependency.

📧 Gmail Is Entering the Gemini Era

Google is transforming Gmail into an AI-powered “personal, proactive inbox assistant” by integrating its latest Gemini 3 AI model, enabling users to ask natural-language questions about their inbox and get concise, context-aware answers without digging through emails.

New capabilities include AI Overviews that summarize entire email threads, Help Me Write and enhanced Suggested Replies to draft or polish messages, and a Proofread feature for advanced grammar and tone checks — with some tools free and others tied to Google AI Pro/Ultra subscriptions.

Gmail will also introduce an AI Inbox view that surfaces priority emails and to-dos (like bills or appointments) above the noise, shifting from a traditional inbox list toward an AI-curated, task-focused experience. These features are beginning to roll out in the U.S., with broader global expansion planned.

🦄 Other Bits

OpenAI just dropped ChatGPT Health

Grok’s deepfake scandal

Some predictions on consumer AI

🏆 Tool of the Week (Not Sponsored)

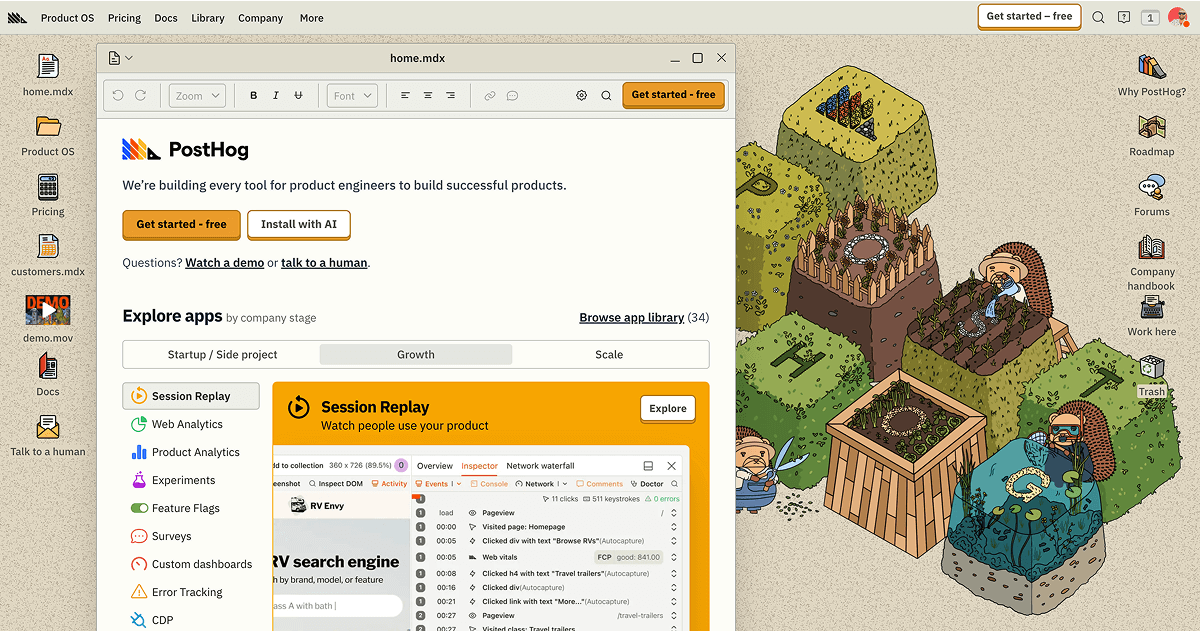

One for the developers… PostHog. IYKYK.

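Did You Know? One of the most famous computer bugs was literally a bug: in 1947, operators working with Grace Hopper on the Harvard Mark II found a moth trapped in a relay and taped it into the logbook as the "first actual case of bug being found." The term "bug" predates the incident, but the story helped popularize it and the idea of "debugging."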

Till next time,